Collecting Feedback & Measuring the Success of a Design System

How do you know if your Design System is actually working well for people and providing value for the company? This is a really complex topic, and I have a few ideas. I'd love to hear some of yours too! Let's chat on LinkedIn or Bluesky.

In a previous post I mentioned how you should productize your Design System. Every product wants customers - it helps them get funding and succeed. The same applies here. It's important to show the value your Design System is bringing to your customers - your colleagues. Measuring success and collecting feedback go hand-in-hand. Not only should you be measuring success, you also need the feedback from people using your Design System so that it can improve.

Measuring is hard, collecting feedback is easier.

To me, collecting feedback is easier than measuring success because it's more direct. Measuring success can be really difficult: the metrics themselves can be tricky to collect, and reviewing them to reach conclusions without bias is hard too. This is exactly why I wrote this article! Without further ado, here are some ideas I've come up with around measuring success and collecting feedback.

"Customer Satisfaction"

This is where I like to start - it's similar to being in sales. The sales department's job is to interact with you and convince you that their product fulfills your needs. In this case, you are the salesperson meeting with your colleagues and convincing them your Design System will help them do their jobs. Pitch the Design System to them if they aren't using it already. "Get in the field" with them. See how they are doing their work currently and offer your Design System as a potential improvement. At a minimum, find out what feedback they have about your Design System. Hopefully you'll sell them on it!

One of my favorite things to do after selling folks on a Design System is meet with both designers and engineers as they work on a new product or feature. Give them access to what they need in Figma and start with the design side of things. Sit with them as they begin to work through the process of building. Ask questions along the way about their thinking and how they feel things are going. Then go do the same thing with engineers - give them access to your Design System library. Was the process smooth overall? Did it accelerate their development at all? If yes, nice! If not, you'll have some feedback to make things better for next time.

Holding office hours is another great way to measure "customer satisfaction". Dedicated time for people to ask questions, or even just hang out and talk about your Design System, spreads the word about your work while also gathering feedback in a casual way. Like the sessions above, office hours can turn into pairing sessions that reveal areas where your team can improve.

Adoption

Another metric I like to use is measuring adoption. Before measuring adoption though, I have a piece of advice: Don't make using your Design System a requirement. It should be optional and left up to the teams building the product/feature. But why?!

Say you're at a company with an existing Design System and a group wants to spin up a new product. If they immediately reach for using the Design System, that's a huge win for your team. It means they see the value in using it versus starting completely from scratch. This is why I prefer making the Design System optional - you want it to be so good that people want to use it for every single project because it saves them time and effort.

Another good adoption metric is when an existing product that isn't using your Design System decides to adopt it. That's huge! It means that team sees the value your Design System provides.

These are more "long term" or "long feedback loop" metrics. Not every organization spins up new products at a rapid pace. That's okay. I think it's one of those items to celebrate when it does happen though. What are some other ways we can measure adoption of our Design System that have a shorter feedback loop?

Component Usage Metrics

I like collecting data around how many times each component is used in a codebase (and even across codebases!). It ties into adoption, and allows you to see over time which components are leading the pack. It can help provide information later on to UX Researchers so they can have a better understanding of patterns in your products. It helps your team understand which patterns are most used across products. This is a great metric around adoption.

A high count of components in a project doesn't necessarily mean the Design System is successful, though. "Adoption" and "success" are related, but someone using your Design System doesn't prove that it's working well for them. Usage data does help the overall process of maintaining and developing a Design System - it's a great way to collect additional feedback and make improvements.

If you release a new component and after a few months you notice that it's not getting used as frequently as you were hoping, that's feedback unlocked by simply keeping track of usage data. You can then figure out why that is and make adjustments.

I had brainstormed this idea with my amazing wife around 2020, but never had enough time to implement it. She ended up building something similar and it's been working out well for her team. Here's the gist:

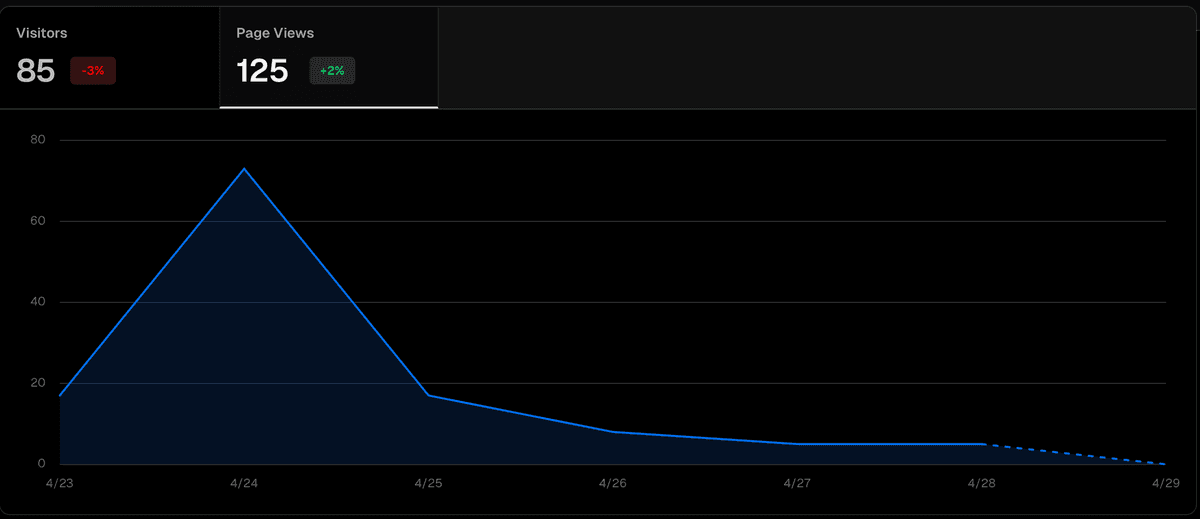

- At some cadence, query for usages of your Design System components (using GitHub APIs, for example).

- Keep track of how many times each component is used across each repository.

- Store this data somewhere you can run queries on it later (a DynamoDB table, S3, Tableau, etc.).

- On a regular cadence (weekly or monthly), post the usage stats. Ideally, build a UI that surfaces this data with nice charts.

- Review the data as a team.
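The counting step above can be sketched in TypeScript. This is a minimal, regex-based sketch, not the tool mentioned above: it assumes the file contents have already been fetched (for example through the GitHub API, which isn't shown), that components are imported by name from a single package, and that usages are written as JSX tags. The package name `@acme/design-system` and the `Repository`/`SourceFile` shapes are hypothetical. Namespace imports, re-exports, and dynamic usage would be missed.

```typescript
// Hypothetical shapes: in practice the contents would come from something like
// the GitHub API; fetching them is out of scope for this sketch.
interface SourceFile {
  path: string;
  contents: string;
}

interface Repository {
  name: string;
  files: SourceFile[];
}

// repository name -> design system component name -> number of JSX usages
type UsageReport = Record<string, Record<string, number>>;

function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Maps local identifiers to the names the design system exports, e.g.
// `import { Button as DSButton } from "..."` yields { DSButton: "Button" }.
function findImportedComponents(source: string, packageName: string): Record<string, string> {
  const importPattern = new RegExp(
    `import\\s*\\{([^}]*)\\}\\s*from\\s*["']${escapeRegExp(packageName)}["']`,
    "g",
  );
  const locals: Record<string, string> = {};
  let match: RegExpExecArray | null;
  while ((match = importPattern.exec(source)) !== null) {
    for (const specifier of match[1].split(",")) {
      const parts = specifier.trim().split(/\s+as\s+/);
      if (parts[0]) {
        locals[parts[1] || parts[0]] = parts[0];
      }
    }
  }
  return locals;
}

// Counts opening JSX tags (`<Button>`, `<Button variant="primary" />`, ...) for
// every component imported from the design system package, per repository.
function countComponentUsages(repos: Repository[], packageName: string): UsageReport {
  const report: UsageReport = {};
  for (const repo of repos) {
    const counts: Record<string, number> = {};
    for (const file of repo.files) {
      const locals = findImportedComponents(file.contents, packageName);
      for (const local of Object.keys(locals)) {
        // The lookahead keeps `<Button` from also matching `<ButtonGroup`.
        const tagPattern = new RegExp(`<${escapeRegExp(local)}(?=[\\s/>])`, "g");
        const uses = (file.contents.match(tagPattern) || []).length;
        const component = locals[local];
        counts[component] = (counts[component] || 0) + uses;
      }
    }
    report[repo.name] = counts;
  }
  return report;
}
```

The report is a plain object per repository, so it can be serialized and stored wherever your team runs queries. A real parser would be more accurate than regular expressions, but a rough count like this may be enough to start spotting trends.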

Collecting data allows you to ask questions. Are the numbers going up or down? Is adoption of a component fairly low? Why could that be? Does that component need additional functionality? Do folks not know when to use that particular component? These are great examples of questions that come up around your Design System - and you can only ask these questions if you collect the data!
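Once you have a few snapshots, questions like "are the numbers going up or down?" become easy to answer. Here's a minimal sketch, assuming per-component totals already exist for two points in time. `compareSnapshots` and the low-adoption threshold are hypothetical; the right threshold is a judgment call for your team.

```typescript
// Total usages per component at one point in time (e.g. summed across repositories).
type UsageTotals = Record<string, number>;

interface ComponentTrend {
  component: string;
  previous: number;
  current: number;
  change: number; // current minus previous
  lowAdoption: boolean; // below the team's chosen threshold
}

// Compares two snapshots (say, last month and this month) and flags components
// whose current usage falls below `lowAdoptionThreshold`.
function compareSnapshots(
  previous: UsageTotals,
  current: UsageTotals,
  lowAdoptionThreshold: number,
): ComponentTrend[] {
  // Include components that appear in either snapshot, so new and removed ones show up.
  const components = Object.keys({ ...previous, ...current });
  const trends = components.map((component) => {
    const before = previous[component] || 0;
    const after = current[component] || 0;
    return {
      component,
      previous: before,
      current: after,
      change: after - before,
      lowAdoption: after < lowAdoptionThreshold,
    };
  });
  // Biggest drops first, so the most surprising changes lead the report.
  return trends.sort((a, b) => a.change - b.change);
}
```

Sorting by change puts the biggest drops at the top, which is a reasonable place to start asking "why?" as a team.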

Component Property Usage Metrics

This is similar to component usage metrics, but it tracks how frequently each component's props are used.
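Prop usage can be counted in much the same way. This is a naive sketch that assumes JSX source and a plain identifier component name such as `Button`; the function names are hypothetical. It doesn't handle quotes inside `{...}` expressions or template literals, so a production tool would more likely walk a real syntax tree (for example with the TypeScript compiler or Babel).

```typescript
// prop name -> number of times it was passed to the component
type PropCounts = Record<string, number>;

// Returns the attribute text of each `<ComponentName ...>` opening tag, with quoted
// strings and `{...}` expressions blanked out so only prop names, `=` and `/` remain.
// Assumes componentName is a plain identifier such as "Button".
function extractAttributeText(source: string, componentName: string): string[] {
  const results: string[] = [];
  const tagStart = new RegExp(`<${componentName}(?=[\\s/>])`, "g");
  let match: RegExpExecArray | null;
  while ((match = tagStart.exec(source)) !== null) {
    let depth = 0; // nesting level of `{ }` expressions
    let quote = ""; // currently open quote character, if any
    let kept = "";
    for (let i = match.index + match[0].length; i < source.length; i++) {
      const ch = source[i];
      if (quote) {
        if (ch === quote) quote = ""; // closing quote
      } else if (depth > 0) {
        if (ch === "{") depth++;
        else if (ch === "}") depth--;
      } else if (ch === '"' || ch === "'") {
        quote = ch;
      } else if (ch === "{") {
        depth = 1;
      } else if (ch === ">") {
        break; // end of the opening tag
      } else {
        kept += ch;
      }
    }
    results.push(kept);
  }
  return results;
}

// Counts how often each prop is passed to `componentName` in one file's source.
// Spread props like `{...rest}` are skipped because their names aren't visible.
function countPropUsage(source: string, componentName: string): PropCounts {
  const counts: PropCounts = {};
  for (const attributes of extractAttributeText(source, componentName)) {
    for (const prop of attributes.match(/[A-Za-z_][\w-]*/g) || []) {
      counts[prop] = (counts[prop] || 0) + 1;
    }
  }
  return counts;
}
```

Only props that actually appear are counted, so comparing the result against the component's full list of props shows which ones never show up at all.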

If something isn't being used, you can begin asking questions about why that may be. Do the designs not call for that functionality as much as you had thought? Does it not have a good UX? Are there accessibility issues? Do people not know about it? Was this added by an engineer, but isn't in Figma, so no designers know about it?

This also helps if you need to deprecate usage of a prop at some point. You'll have the data to help you decide if it's a good idea and what the impact to users will be. Maybe you'll write a codemod to auto-migrate folks to a new property name you want to use instead. Having data around how your users are using your components is extremely helpful when it comes to maintaining and improving a Design System.

User Interviews and Anonymous Surveys

User interviews can be really helpful for finding areas to improve. I suggest scheduling them with folks who have been using your Design System for at least a few months. That's long enough for them to offer suggestions on what could improve their day-to-day, while recent enough that they may still remember their "I'm new" feedback. I also suggest interviewing folks at different levels - more junior folks may have feedback that is completely different from more senior people. This makes sure you cover a wide spectrum.

User interviews are great, but there's another way to get feedback as well. People love leaving reviews. Take a look at Google and Amazon reviews. But in a professional setting, sometimes people don't feel as though they can be as open or honest as when they review their cool wolf t-shirt. This is totally understandable!

Not everyone wants to tell you to your face (or via Slack) that the component you made sucks or has issues. It can be difficult for folks to bring up. But if you provide an anonymous way for folks to provide feedback, it can give a chance for those voices to be heard in a safe space.

We want all of the feedback - positive, negative, and somewhere in between. Offering many avenues to get this information is key. I typically use a Google Form. They're quick to set up, and most folks are already familiar with the UI/UX of Google products.

Accessibility Metrics

Does using your Design System improve the results of an accessibility ("a11y") audit? One metric I've used in the past is comparing audit results before and after introducing a Design System into an existing product. If your audit score improves, that's great. Another win for your Design System! After all, I firmly believe Design Systems should have as much accessibility baked in as possible. This topic deserves an article by itself!

There are a ton of tools to help measure accessibility in your products. Here are some I recommend:

- Follow the ARIA Authoring Practices Guide (APG), including its Patterns and Practices sections.

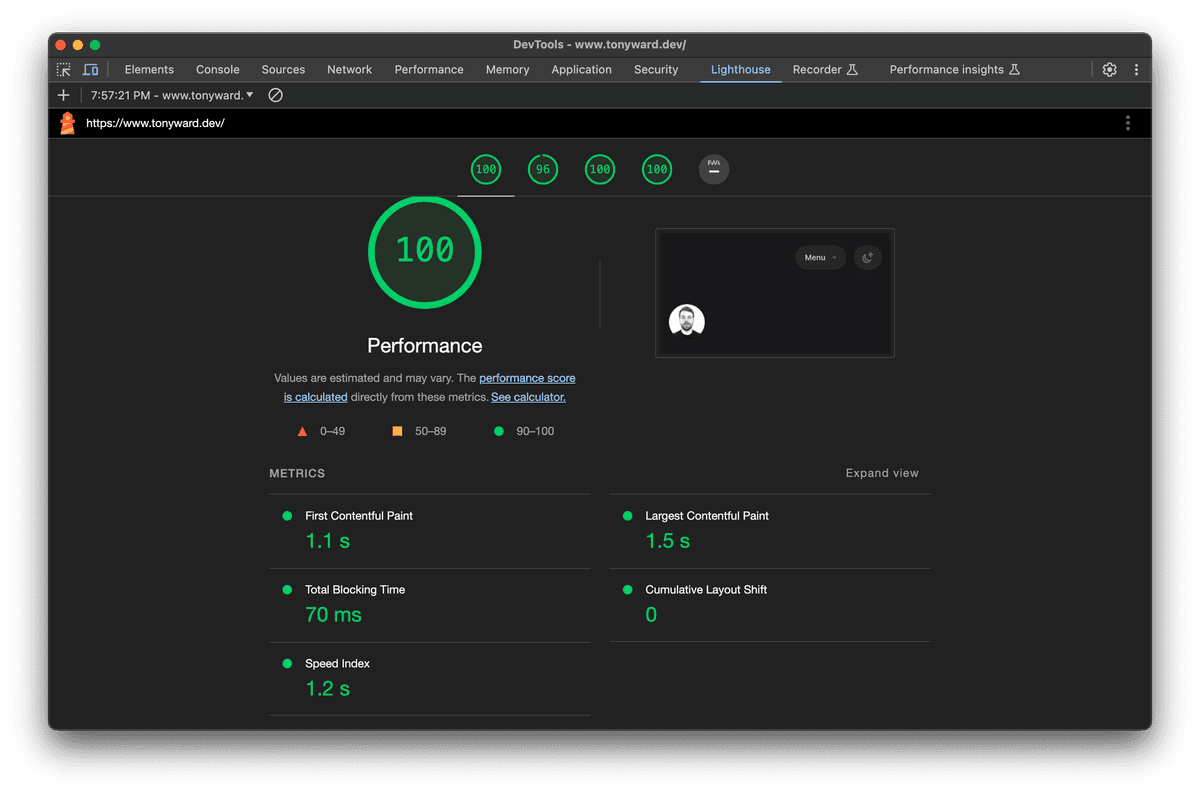

- Lighthouse is a fine place to begin your a11y improvement journey. If you're already using Chrome, it's really easy to use! (It also ties into my performance point below.)

- axe DevTools for Chrome is a great extension that provides a ton of a11y information. If you're already using Chrome, it's another no-brainer to install.

- axe-core allows you to write integration or end-to-end tests and verify accessibility programmatically.

- pa11y is another great tool to use that's similar to axe-core. I haven't used it yet, but I've been keeping an eye on it for a while now and have heard excellent things.

- If you're using Storybook, their accessibility addon shows a11y issues right in your story. Convenient!

Web Performance Metrics

Does using your Design System improve the web performance of your application? A Lighthouse performance score is one place to start. I'm not sure how much weight this metric should carry, but it could be interesting to see how your Design System affects performance.

Bug Metrics

When folks begin adopting your Design System, do fewer bugs appear in the product? The hope is that the application becomes more stable, with fewer bugs over time as adoption increases. This is another "long term measurement" that may pay off over time, but it can be hard to get data for in the short term.

One case I've seen involved a photo upload component that was shared across an application. The existing component was quite buggy, had some UI/UX issues around drag and drop, and wasn't accessible. The Design System team I was on built a new component for this use case. It handled receiving photos from the file system, supported drag and drop, worked with the keyboard and screen readers, and had a nice horizontal scroll-snap behavior. When we replaced the existing component with our new one, we immediately removed about five bug tickets from the backlog.

Of course, our team spent time and effort building this component that could have gone toward fixing the bugs directly. However, by the end of the task, the Design System team had provided a design approved™ component with better UI/UX and better tests, and it was accessible via keyboard and screen readers. It could also be used across multiple features and products. The product team was also very happy we took on the work to build that particular component, and it strengthened our overall relationship with them. That's a win in my book!

Documentation Site Analytics

Keeping an eye on analytics for your documentation site is a great way to learn how your Design System is used. Are people regularly visiting certain pages? Is that because the component is used frequently, or because people are having issues with it and diving in to learn more? This is all great information to have available! Combining what you learn from interviews and the other methods above with your documentation site analytics may reveal additional areas for improvement.

That's All, Folks

Well, if you made it this far, thank you for reading my ramblings! As mentioned at the beginning, measuring success can be difficult, but it's paramount to the success of your Design System. Collecting feedback is equally important, since it creates opportunities for improvement. These are all the ideas that come to mind for me around those two areas. What did I miss? Let's chat on LinkedIn or Bluesky. Cheers!

Tony Ward
